How I analyzed the IoT industry to observe the tools and practices used to monitor and study commercial and open-source systems

Introduction

Industrial automation is on the verge of adopting new technologies that optimize existing industrial processes and use the data generated on the shop floor to make decisions online, thereby controlling the process intelligently. The concepts of Industry 4.0 have opened the door to many hardware and software configurations in the area of the Industrial Internet of Things (IIoT). Here, an ecosystem of commercial, proprietary systems competes with a community of open-source products that are proving to be an economically viable alternative to traditional systems.

I set out to examine such configurations in light of the existing needs of the industrial environment, to suggest the best alternatives, and to describe the components that make up an Industrial IoT system along with their advantages over the traditional automation approach. The approach I took also serves as a road map for implementing these alternatives in an industrial setting, reducing the setup time and overall cost of industrial processes that involve sensor data acquisition and control.

IoT Startups Under a Microscope

I am based in Edmonton, Alberta, so after a literature survey on the subject, I was ready to approach the startup community in Edmonton and observe how it tackles some far-reaching industrial problems. Observing the Edmonton tech ecosystem meant coming to terms with the following facts:

- Fast-growing startups often stay at the bleeding edge of technology

- Well-established organizations use proprietary software and hardware, which cost about 3-4 times as much as their open-source counterparts. Emerging startups focus more on open-source alternatives.

- Startups today are eager to adopt cloud infrastructure. They have an advantage here, since well-established companies often have to invest in additional DevOps staff to move their legacy systems to the cloud.

- Some legacy monitoring tools from the previous generation of the industry require more investment, more infrastructure, and, more importantly, more time to set up. Startups compete with each other to get their product to market and are therefore more time-bound than well-established companies. They need accuracy and efficiency both in setting up the tools and in using them daily.

Going Open Source

The open-source and software freedom movement took shape with the founding of the Free Software Foundation (FSF) by Richard Stallman, who introduced the copyleft clause in software licensing. This gave companies the freedom to use, modify, and distribute free and open-source software without infringing on the rights of its authors, provided that derivative works are distributed under the same terms.

A lot of open-source software has shaped the IoT industry over the years. IoT revolves around real-time processing. The Linux kernel, which is open source, can be run in a real-time mode using the PREEMPT_RT patch set (often referred to as real-time Linux), and it can serve as the kernel on all of the edge computing devices in a traditional IoT service mesh.

Real-time processing calls for a real-time database. In my research I looked at the TICK stack for IoT workflows:

- Telegraf: A metrics collection agent. It can be used to collect and send metrics to any time-series database. Telegraf’s plugin architecture supports the collection of metrics from 100+ popular services right out of the box.

- InfluxDB: A time-series database that supports streaming right out of the box. It has features like log rotation and affinity, and can be connected to visualization tools using connectors.

- Chronograf: A UI layer for the whole TICK stack. It can be used to set up graphs and dashboards of data in InfluxDB and hook up Kapacitor alerts.

- Kapacitor: A metrics and events processing and alerting engine. It can be used to crunch time series data into actionable alerts and easily send those alerts to many popular products like PagerDuty and Slack.

The interesting part about the TICK stack is that any component in it can be replaced by another one that uses the same interfaces. People often use this to their benefit by adding custom dashboards instead of Chronograf, a popular choice being Grafana, which we will talk about later in this post.
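To make the shared interface a bit more concrete: InfluxDB accepts points in a plain-text format called line protocol, which is what Telegraf emits when it writes to InfluxDB. The sketch below formats one sensor reading that way. It is a simplified illustration rather than a full implementation of the format, and the measurement, tag, and field names are made up for the example.

```python
def _escape(value: str, chars: str) -> str:
    """Backslash-escape every character of `chars` that appears in `value`."""
    for ch in chars:
        value = value.replace(ch, "\\" + ch)
    return value


def _format_field(value) -> str:
    """Render a field value using line-protocol type conventions."""
    if isinstance(value, bool):  # bool must be checked before int
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"  # integers carry an 'i' suffix
    if isinstance(value, float):
        return repr(value)
    escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'  # strings are double-quoted


def to_line_protocol(measurement, tags, fields, timestamp_ns=None) -> str:
    """Build one line: measurement,tag=value field=value [timestamp]."""
    if not fields:
        raise ValueError("a point needs at least one field")
    head = _escape(measurement, ", ")
    for key in sorted(tags):  # sorted tag keys are recommended, not required
        head += f",{_escape(key, ',= ')}={_escape(str(tags[key]), ',= ')}"
    body = ",".join(
        f"{_escape(name, ',= ')}={_format_field(value)}"
        for name, value in fields.items()
    )
    line = f"{head} {body}"
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"
    return line


# Hypothetical reading from a shop-floor temperature sensor.
line = to_line_protocol(
    "temperature",
    {"device": "sensor-01", "site": "shop floor"},
    {"value": 21.5, "ok": True},
    1592900000000000000,
)
```

Anything that can produce or consume lines like this can stand in for the corresponding TICK component, which is why swapping the dashboard layer is straightforward.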

These were some of the free and open-source solutions that people use. Now let us see how startups combine free and proprietary software and services to build the best solution for IoT in Industry 4.0.

Going Cloud Native

Cloud computing started gaining a lot of popularity, especially after 2015. The figure below shows the distribution of cloud computing market revenues worldwide, from June 2015 to June 2019.

Three main names stand out in the study:

- Amazon Web Services (AWS)

- Google Cloud Platform (GCP)

- Microsoft Azure

All of these services offer PaaS (platform as a service) as of 2020, with some of them offering hybrid CaaS (container as a service) as well.

Such a boost in the market share of the multinational conglomerates had not been seen since virtualization was introduced. It is due to one more key player in the industry: containerization.

Containerization is a process virtualization technique that isolates running processes in their own environment while letting them use the underlying hardware directly, without an intermediate layer. This is unlike a virtual machine, where you run one operating system on top of another. Containerization tools like Docker have made it easier for polyglot developers and cloud computing conglomerates alike to manage their services, and they have now entered the IoT market as well, especially since Docker can run on edge computing devices such as the Raspberry Pi and various other devices with ARM architecture.

A Case Study

How I migrated an IoT system to Industry 4.0 standards.

Prelude

The IoT devices need to be connected in a network that is wide-ranging and has a low power demand. For this, a Low Power Wide Area Network (LPWAN) is used to allow long-range communication at low bandwidth. This network can be thought of as having a range greater than a typical Local Area Network (LAN) but lower than a cellular/GSM network.

Among these networks, LoRa (Long Range) and LoRaWAN (Long Range Wide Area Network) operate in license-free spectrum. LoRa provides the device-to-infrastructure physical layer, while the LoRaWAN protocol built on top of it is maintained by the LoRa Alliance®, an open association of collaborating members. The LoRa Alliance® describes the LoRaWAN specification as ‘a Low Power Wide Area networking protocol designed to wirelessly connect battery operated things to the internet in regional, national or global networks, and targets key Internet of Things (IoT) requirements such as bi-directional communication, end to end security, mobility and localization services.’

![LoRaWAN Information Flow [Lora Alliance official: https://lora-alliance.org/about-lorawan] lorawan](/images/screenshot-2020-06-23-at-1.04.32-pm.png)

LoRaWAN includes a frequency hopping capability that allows data transfers to occur across the bandwidth. It also includes a Data Rate (DR) setting that allows a trade-off between message range and message duration. LoRaWAN data rates range from 0.3 kbps to 50 kbps. Officially, LoRaWAN has been deployed across 143 countries by 133 LoRaWAN network operators. Source: LoRa Alliance 2019 Annual Report
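To get a feel for the range versus duration trade-off, here is a rough sketch that estimates how long a LoRa packet stays on the air, using the time-on-air formula published in Semtech's SX127x datasheets. The spreading factor, the 125 kHz bandwidth, the coding rate, and the 10-byte payload are assumptions chosen for illustration; they are not values from the project described in this post.

```python
import math


def lora_time_on_air(
    payload_bytes: int,
    spreading_factor: int,
    bandwidth_hz: int = 125_000,
    coding_rate: int = 1,          # 1 means 4/5, up to 4 meaning 4/8
    preamble_symbols: int = 8,
    explicit_header: bool = True,
    crc: bool = True,
) -> float:
    """Estimate the on-air time of one LoRa packet, in seconds."""
    symbol_time = (2 ** spreading_factor) / bandwidth_hz

    # Low data rate optimization is typically enabled for symbols over 16 ms.
    de = 1 if symbol_time > 0.016 else 0
    h = 0 if explicit_header else 1

    numerator = (
        8 * payload_bytes
        - 4 * spreading_factor
        + 28
        + 16 * (1 if crc else 0)
        - 20 * h
    )
    denominator = 4 * (spreading_factor - 2 * de)
    payload_symbols = 8 + max(
        math.ceil(numerator / denominator) * (coding_rate + 4), 0
    )

    preamble_time = (preamble_symbols + 4.25) * symbol_time
    return preamble_time + payload_symbols * symbol_time


# Same 10-byte payload at the fastest and slowest common spreading factors.
fast = lora_time_on_air(10, spreading_factor=7)
slow = lora_time_on_air(10, spreading_factor=12)
```

With these assumptions the slow, long-range setting keeps the radio transmitting more than twenty times longer than the fast one for the same payload, which is the cost paid for the extra range.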

Cloud Migration

This edge computing system needed to be scaled according to the industry requirements of the particular project I was working on. This meant that we needed a scalable, cloud-native service to handle communication between IoT devices and solutions.

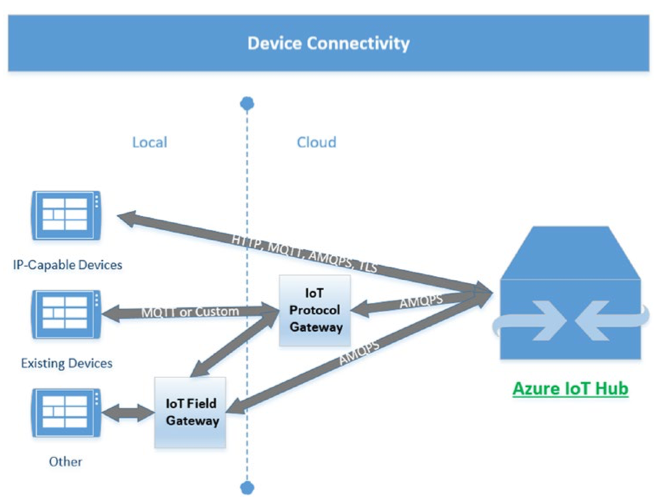

We needed a bi-directional communication service that manages the connectivity of various sensors and actuators and allows a large number of devices to connect to a single instance. Above all, we required a reliable device-to-cloud and cloud-to-device messaging service with secure communication and event monitoring. Enter Microsoft Azure IoT Hub.

It appeared to address all of our requirements for migrating to the cloud. We decided on the Message Queuing Telemetry Transport (MQTT) protocol for our event sourcing and communication. MQTT transfers messages using a publish/subscribe model, which suits sensors that need to send data at a continuous rate. The connection between the IoT Hub and the gateway uses AMQPS (AMQP secured with TLS), a message-queuing protocol, which helps optimize the flow of data.
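The publish/subscribe idea is easy to see in a few lines of code. The sketch below is a toy, in-process stand-in for an MQTT broker, not a real MQTT client: it only shows how subscribers register topic filters (with the `+` single-level and `#` multi-level wildcards) and how a published message is routed to the matching ones. The class name and topic names are hypothetical.

```python
from typing import Callable, List, Tuple


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT-style topic filter matches a concrete topic."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for index, level in enumerate(filter_levels):
        if level == "#":  # multi-level wildcard matches everything below
            return True
        if index >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[index]:
            return False
    return len(filter_levels) == len(topic_levels)


class ToyBroker:
    """Minimal in-memory broker that routes messages by topic filter."""

    def __init__(self) -> None:
        self._subscriptions: List[Tuple[str, Callable[[str, str], None]]] = []

    def subscribe(self, topic_filter: str, callback: Callable[[str, str], None]) -> None:
        self._subscriptions.append((topic_filter, callback))

    def publish(self, topic: str, payload: str) -> int:
        """Deliver the payload to every matching subscriber; return the count."""
        delivered = 0
        for topic_filter, callback in self._subscriptions:
            if topic_matches(topic_filter, topic):
                callback(topic, payload)
                delivered += 1
        return delivered


broker = ToyBroker()
received = []
broker.subscribe("plant/+/temperature", lambda t, p: received.append((t, p)))

sent_temp = broker.publish("plant/line1/temperature", "21.5")
sent_humidity = broker.publish("plant/line1/humidity", "40")
```

The sensor publishing the reading never needs to know who is listening, which is what makes this model a good fit for devices that stream data continuously.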

Once the IoT Hub is set up, IoT devices can be added, each identified by a unique Device ID. For each of these devices on the network, Azure can receive the data payload as configured.
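As a rough illustration of how the Device ID ties into messaging: when a device talks to IoT Hub directly over MQTT, the Device ID appears in the topic names. The sketch below builds the device-to-cloud topic and the cloud-to-device topic filter as I understand them from the Azure IoT Hub MQTT documentation, so check the current docs before relying on them. The device ID and the payload fields are hypothetical.

```python
import json


def device_to_cloud_topic(device_id: str) -> str:
    """Topic a device publishes telemetry to (per Azure IoT Hub MQTT docs)."""
    return f"devices/{device_id}/messages/events/"


def cloud_to_device_topic_filter(device_id: str) -> str:
    """Topic filter a device subscribes to for cloud-to-device messages."""
    return f"devices/{device_id}/messages/devicebound/#"


def telemetry_payload(sensor: str, value: float, unit: str) -> str:
    """Serialize one reading as JSON; the field names are illustrative only."""
    return json.dumps({"sensor": sensor, "value": value, "unit": unit})


# Hypothetical device registered in the hub as "sensor-01".
topic = device_to_cloud_topic("sensor-01")
payload = telemetry_payload("temperature", 21.5, "C")
```

An MQTT client on the device would publish `payload` to `topic` and subscribe to the filter returned by `cloud_to_device_topic_filter` to receive commands.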

In addition to the IoT Hub, we needed a virtual machine for our InfluxDB database as well as for some of the edge computing we were doing. Setting up a new VM on Microsoft Azure took just a couple of clicks. Using SSH, we were then able to install all of the software we needed.

Enter Containerization

We had to run a variety of software, including:

- Grafana

- InfluxDB

- Azure IoT Hub Connector

- Custom Prediction Model for Alerts

This would have meant installing all of their dependencies on the host machine itself. Instead, we decided to containerize the services so that the dependencies of each application were isolated at runtime. Even our InfluxDB ran in a container.

Docker has its own DNS resolution for containers on the same user-defined network, so simply giving the container name in the database connection string in the Grafana plugin interface allowed us to connect it to our InfluxDB. In just under an hour, we had our entire workflow ready, including a real-time dashboard connected to our time-series database.
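In practice this means the connection URL contains a container name where you would normally expect an IP address. Here is a small sketch of what that looks like from inside another container. The container name `influxdb` is an assumption for the example, and 8086 is InfluxDB's default HTTP port.

```python
import socket


def influxdb_url(container_name: str = "influxdb", port: int = 8086) -> str:
    """Build the URL you would paste into Grafana's data source settings."""
    return f"http://{container_name}:{port}"


def can_resolve(hostname: str) -> bool:
    """Check whether the name resolves from where this code is running.

    Inside a container on the same user-defined Docker network as the
    database container, Docker's embedded DNS should answer for the
    container name. On the host itself it usually will not resolve.
    """
    try:
        socket.gethostbyname(hostname)
        return True
    except OSError:
        return False


url = influxdb_url()
```

Calling `can_resolve("influxdb")` from inside the Grafana container is a quick way to confirm that both containers really are attached to the same network before debugging anything else.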

Conclusion

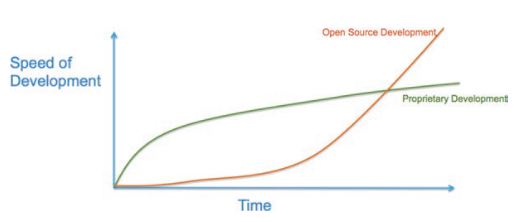

While the open-source community is still growing and has a varied user base and community support, proprietary tools and technologies have dedicated teams working on them but offer less flexibility in features, and keeping them going means assigning dedicated resources to the project. On the other hand, if the open-source community takes note of a tool and finds it useful, the user base grows dramatically, with more and more people engaged in developing and maintaining it. This is referred to as the crowdsourcing effect, as detailed in ‘A. Rayes, S. Salam, Internet of Things From Hype to Reality’. The graph above shows the speed of development against the time required to achieve this crowdsourcing effect.

This post focuses on using a mixture of proprietary and open-source systems to arrive at the best solution to a hardware-software connection problem in the current Industry 4.0 scenario. It also argues that the community at large would benefit from being more open to tools that cut down the setup, connection, troubleshooting, and replacement time that their proprietary counterparts require.